When compiling NWChem, I used the following environment variable settings:

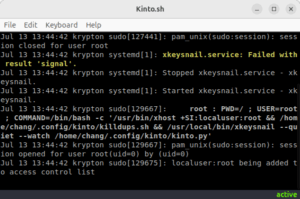

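The NWChem build itself can follow the official NWChem manual, but after the build finished I still could not get the binary into debug mode. After some trial and error, I found that the environment variable settings below produce an NWChem binary that can be debugged (I will come back later to work out which setting actually made the difference):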

export NWCHEM_TOP=<PATH-TO-NWCHEM>

export NWCHEM_TARGET=LINUX64

export NWCHEM_MODULES=smallqm

export USE_NOFSCHECK=TRUE

export USE_NOIO=TRUE

export BUILD_OPENBLAS=y

export BLAS_SIZE=4

export LAPACK_SIZE=4

export USE_64TO32=y

export USE_SERIALEIGENSOLVERS=y

export USE_MPI=n

export USE_MPIF=n

export USE_ARUR=n

export USE_HWOPT=n

#export ARMCI_NETWORK=MPI-PR

export FC=gfortran

export FDEBUG="-g -O0"

export CDEBUG="-g -O0"

export USE_DEBUG=y
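Since I have not yet pinned down which variable matters, a small pre-build check can at least rule out the most obvious cause, debug flags that never reached the environment. The sketch below is not part of the NWChem build system: `has_debug_flags` and `check_debug_env` are hypothetical helper names, and the check assumes that `-g` and `-O0` in `FDEBUG` and `CDEBUG` are what we want to see.

```shell
#!/bin/sh
# Hypothetical helper: succeed only if the flag string in $1
# contains both -g and -O0 as separate words.
has_debug_flags() {
    case " $1 " in
        *" -g "*) ;;
        *) return 1 ;;
    esac
    case " $1 " in
        *" -O0 "*) return 0 ;;
        *) return 1 ;;
    esac
}

# Hypothetical helper: check FDEBUG and CDEBUG in the current shell
# and report the first one that lacks the debug flags.
check_debug_env() {
    for name in FDEBUG CDEBUG; do
        eval "value=\${$name:-}"
        if has_debug_flags "$value"; then
            echo "$name ok: $value"
        else
            echo "$name missing -g or -O0: '$value'"
            return 1
        fi
    done
}
```

After sourcing the exports above in the same shell, calling `check_debug_env` before starting the build should report both variables as ok; if it does not, the exports were probably made in a different shell session.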

In VS Code, to make debugging easier, add a launch.json file (in the workspace's .vscode folder):

{
    // Use IntelliSense to learn about possible attributes.
    // Hover to view descriptions of existing attributes.
    // For more information, visit: https://go.microsoft.com/fwlink/?linkid=830387
    "version": "0.2.0",
    "configurations": [
        {
            "name": "input file",
            "type": "cppdbg",
            "request": "launch",
            "program": "${workspaceFolder}/bin/LINUX64/nwchem",
            "args": ["${file}"],
            "stopAtEntry": false,
            "cwd": "${fileDirname}",
            "environment": [],
            "externalConsole": false,
            "MIMode": "gdb",
            "setupCommands": [
                {
                    "description": "Enable pretty-printing for gdb",
                    "text": "-enable-pretty-printing",
                    "ignoreFailures": true
                }
            ]
        }
    ]
}
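For comparison, the launch configuration above corresponds roughly to starting gdb by hand. The sketch below first checks that the binary carries a `.debug_info` section, which is one way to tell whether the debug flags took effect. The function names `has_debug_info` and `debug_nwchem` are hypothetical, and the sketch assumes that the VS Code workspace folder is `$NWCHEM_TOP` and that `readelf` and `gdb` are installed.

```shell
#!/bin/sh
# Hypothetical helper: return 0 if the file in $1 has a .debug_info
# section, 1 if it does not, 2 if the file does not exist.
has_debug_info() {
    [ -f "$1" ] || return 2
    readelf -S "$1" 2>/dev/null | grep -q '\.debug_info'
}

# Hypothetical helper: run gdb on nwchem with the input file in $1,
# mirroring "program", "args" and "cwd" from launch.json.
# Assumes the workspace folder is $NWCHEM_TOP.
debug_nwchem() {
    binary="$NWCHEM_TOP/bin/LINUX64/nwchem"
    if ! has_debug_info "$binary"; then
        echo "no debug info found in $binary" >&2
        return 1
    fi
    # Run from the input file's directory, like "cwd": "${fileDirname}".
    ( cd "$(dirname "$1")" && gdb --args "$binary" "$(basename "$1")" )
}
```

With the exports from the build in effect, `debug_nwchem path/to/input.nw` would open gdb on the input file; the input path here is only a placeholder.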

